Chaion Analytics

Entropy in Statistical Mechanics

Copyright © 2011 by Robert Finkel

Probabilities

Averaging

Entropy

Moles & Gas Constant

Thermodynamics

Partition Function

Gibbs Entropy

Entropy is intimately connected to statistical concepts and is often one of the functions most readily found from molecular models. Entropy S appears in the fundamental thermodynamic expression

$$dU = T\,dS - P\,dV.$$

Three forms of entropy are widely used in thermal physics. The thermodynamic or classical form is

$$dS = \frac{\delta Q_{\mathrm{rev}}}{T}.$$

Most traditional presentations of statistical mechanics begin by expressing entropy in terms of W, the number of equally probable microscopic states that constitute a given macroscopic state. For example, a macroscopic state where 2 people (molecules) are seated in any 6 chairs can be achieved in W = 6 × 5 = 30 microscopic ways. Entropy is a technical measure of randomness, and this is apparent in the statistical form. The entropy is

$$S = k \ln W, \qquad (1)$$

where k is Boltzmann's constant.
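The chair-counting example can be checked by brute force. The following is a minimal sketch, assuming the two people are distinguishable (so swapping their chairs counts as a new microstate, which is what gives 30 rather than 15). The function names `count_seatings` and `boltzmann_entropy` are my own labels for illustration; the entropy uses the standard statistical form S = k ln W.

```python
import math
from itertools import permutations

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def count_seatings(chairs: int, people: int) -> int:
    """Count ordered seatings of distinguishable people by explicit enumeration."""
    return sum(1 for _ in permutations(range(chairs), people))


def boltzmann_entropy(w: int) -> float:
    """Statistical entropy S = k ln W for W equally probable microstates."""
    return K_B * math.log(w)


W = count_seatings(6, 2)
print(W)                     # 30, the same as 6 * 5
print(boltzmann_entropy(W))  # entropy of this toy "macrostate" in J/K
```

Enumeration is only practical for toy cases like this one; for a real gas the count is astronomically large and only its logarithm is ever computed.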

Our objective on this page is to use Eq. (1) to derive state equations for an ideal gas, such as the familiar ideal gas law.

De Broglie recognized that all matter exhibits both wave and particle properties. The expression he deduced applies to all matter, including the most familiar particles: photons, electrons, protons, and neutrons. Each object is both a particle and a wave and shows one property or the other depending upon the circumstances. De Broglie expressed the wavelength λ of the matter-wave in terms of the momentum p of the particle: the wavelength is Planck's constant h divided by p,

$$\lambda = \frac{h}{p}.$$
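To get a feel for the sizes involved, here is a small sketch that evaluates the de Broglie relation, wavelength = h / p, for an electron. The speed of 1.0e6 m/s is an arbitrary illustrative value, and the momentum is taken as the non-relativistic p = m v, which is an assumption of this example rather than something stated above.

```python
H = 6.62607015e-34              # Planck constant in J*s (exact SI value)
ELECTRON_MASS = 9.1093837015e-31  # electron rest mass in kg (CODATA 2018)


def de_broglie_wavelength(momentum: float) -> float:
    """Return the matter-wave wavelength h / p in metres."""
    if momentum <= 0:
        raise ValueError("momentum must be positive")
    return H / momentum


speed = 1.0e6  # m/s, illustrative value well below the speed of light
lam = de_broglie_wavelength(ELECTRON_MASS * speed)
print(f"{lam:.3e} m")  # roughly 7.3e-10 m, a few atomic diameters
```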

You can use this derive some approximate expressions

for the number of microstates W in an ideal gas. While these are approximate, they

enable us to derive some correct state equations. This can be attributed to

the fact that unimportant approximate particulars are washed out by the averaging process

while the salient features survive to be reflected in the macroworld. A

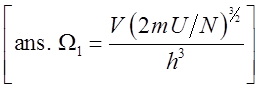

crude measure of the number of microstates, denoted ã°¡n

style='font:7.0pt "Times New Roman"'> Evaluate microstates The

total number of microstates for N particles is the product of the of all

the independent individual microstates,

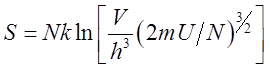
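The product rule for independent particles can be illustrated numerically. This is only a sketch: the per-particle count of 1000 is a made-up number, not a value from this page. It shows that multiplying the individual counts is equivalent to adding their logarithms, which is why ln W (and hence the entropy) grows in proportion to N.

```python
import math
from typing import Sequence


def total_microstates(per_particle: Sequence[int]) -> int:
    """Total count W as the product of independent per-particle counts."""
    return math.prod(per_particle)


def log_total_microstates(per_particle: Sequence[int]) -> float:
    """ln W computed as a sum of logs, avoiding the huge product itself."""
    return sum(math.log(w) for w in per_particle)


counts = [1000] * 5  # hypothetical: 5 particles, 1000 states available to each
W = total_microstates(counts)
ln_W = log_total_microstates(counts)

print(W)                   # 1000000000000000
print(math.log(W), ln_W)   # the two routes to ln W agree
```

For a macroscopic N the product itself cannot be stored, but the sum of logarithms stays manageable, and ln W is all that the statistical entropy requires.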

This is not pretty, but it is easy to extract from it all possible macroscopic information regarding an ideal gas. We do this with equations from thermodynamics involving partial derivatives.

Partial Derivatives

Consider a function S that depends on more than one independent variable, say V and U. We often need to differentiate S with respect to one variable, say V, while treating any other independent variables (like U) as if they were constants. This is the partial derivative of S with respect to V and is denoted as

$$\left(\frac{\partial S}{\partial V}\right)_U.$$
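A partial derivative can be estimated numerically by nudging one variable while holding the others fixed. The sketch below uses a deliberately simple, hypothetical function S(U, V) = U V², chosen only because its partial derivatives are easy to verify by hand (2UV with respect to V, and V² with respect to U). The helper name `partial_derivative` is my own.

```python
def partial_derivative(f, point: dict, var: str, step: float = 1e-6) -> float:
    """Central-difference estimate of df/d(var) at `point`,
    holding every other variable in `point` fixed."""
    up = dict(point)
    down = dict(point)
    up[var] += step
    down[var] -= step
    return (f(**up) - f(**down)) / (2 * step)


def S(U: float, V: float) -> float:
    """Hypothetical two-variable function used only for illustration."""
    return U * V**2


point = {"U": 3.0, "V": 2.0}
dS_dV = partial_derivative(S, point, "V")  # analytic value: 2*U*V = 12
dS_dU = partial_derivative(S, point, "U")  # analytic value: V**2 = 4
print(dS_dV, dS_dU)
```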

Given a function

[ans.

Ideal Gas

Ideal gas derivations are favorites to illustrate applications of statistical mechanics. Two useful thermodynamic equations are developed on our Thermodynamics page. Here I present them as given:

$$\frac{1}{T} = \left(\frac{\partial S}{\partial U}\right)_V, \qquad \frac{P}{T} = \left(\frac{\partial S}{\partial V}\right)_U.$$

Apply the thermodynamic equations above to the entropy
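As a rough numerical check of this step, the sketch below assumes the standard monatomic ideal gas scaling, in which W is proportional to V^N U^(3N/2), so that S = N k (ln V + (3/2) ln U) plus a constant that drops out of every derivative. That entropy form is an assumption of this example and is not copied from the expression on this page. Applying the standard relations 1/T = (∂S/∂U) at constant V and P/T = (∂S/∂V) at constant U should then return PV = NkT and U = (3/2) NkT.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def entropy(U: float, V: float, N: float) -> float:
    """Assumed ideal-gas entropy, up to an additive constant:
    S = N k (ln V + 1.5 ln U)."""
    return N * K_B * (math.log(V) + 1.5 * math.log(U))


def central_diff(f, x: float, rel_step: float = 1e-6) -> float:
    """Central-difference derivative of a one-variable function at x."""
    h = x * rel_step
    return (f(x + h) - f(x - h)) / (2 * h)


N = 6.02214076e23         # one mole of particles
T_input = 300.0           # K, used only to pick a consistent energy U
V = 0.0248                # m^3, illustrative volume
U = 1.5 * N * K_B * T_input

inv_T = central_diff(lambda u: entropy(u, V, N), U)     # (dS/dU) at fixed V
P_over_T = central_diff(lambda v: entropy(U, v, N), V)  # (dS/dV) at fixed U

T = 1.0 / inv_T
P = P_over_T * T
print(T)                      # recovers about 300 K
print(P * V / (N * K_B * T))  # about 1, i.e. PV = NkT
```

The derivative with respect to U returns the temperature that was used to set U, which is the statement U = (3/2) NkT, and the derivative with respect to V reproduces the ideal gas law.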
